| | 28th Jan (Tue) | 29th Jan (Wed) |
|---|---|---|
| 9:50 | Opening Remark | |
| 10:00 | Shunichi Amari * | Susanne Still * (Remote Talk) |
| 11:00 | Martin Bieh * | Larissa Albantakis * (Remote Talk) |
| 12:00 | lunch + poster | lunch + poster |
| 13:00 | Sosuke Ito | Christoph Salge * |
| 14:00 | Hideaki Shimazaki * | Pedro Mediano * |
| 15:00 | coffee + poster | coffee + poster |
| 16:00 | Takuya Isomura | Fernando Rosas * |
| 17:00 | Masatoshi Yoshida | Something Interesting |

* broadcast and recorded

Shun-ichi Amari

Geometry of randomly connected deep neural networks

It is a pleasant surprise in information theory that random codes are efficient codes. Randomness plays a nice role. Similarly, randomly connected deep neural networks have many nice properties. We focus on the following fact: when the width of a network is sufficiently large, any target function is realized in a close neighborhood of any randomly connected deep network. This was proved in the theory of the neural tangent kernel, but the proof is complicated. We give a simple geometrical proof. The proof is supported by a piece of high-dimensional magic: the projection of a sphere onto a low-dimensional subspace shrinks drastically. This elucidates the geometry of the parameter space of deep networks.
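The shrinkage mentioned above can be checked numerically. The sketch below is a generic illustration, not the proof given in the talk: it samples points uniformly from the unit sphere in n dimensions and measures the squared length of their projection onto the first k coordinates, which averages k/n. The function name and the parameter values are hypothetical.

```python
import numpy as np


def mean_projected_sq_norm(n, k, trials=2000, seed=0):
    """Average squared length of unit vectors in R^n after projection
    onto the first k coordinates (hypothetical helper for illustration)."""
    rng = np.random.default_rng(seed)
    # Normalised Gaussian vectors are uniformly distributed on the unit sphere.
    x = rng.standard_normal((trials, n))
    x /= np.linalg.norm(x, axis=1, keepdims=True)
    # By rotational symmetry, any k-dimensional subspace behaves like the
    # span of the first k coordinate axes.
    return float(np.mean(np.sum(x[:, :k] ** 2, axis=1)))


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, mean_projected_sq_norm(n, k=10))
```

With n = 1000 and k = 10 the mean squared projected length is close to 0.01, so a unit vector shrinks to roughly a tenth of its length.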

Susanne Still

Thermodynamic efficiency and predictive inference

Learning and adaptive information processing are central to living systems and agents. There has been a long debate about whether these phenomena are driven by underlying physical principles, and what these are. Meanwhile, the study of these phenomena has led to machine learning. Both artificial and biological learners have to make inferences to predict relevant quantities from available data. The relative costs and benefits of more or less complicated memories have to be weighed against each other, but how precisely should a general strategy be designed? Much of machine learning uses philosophical postulates, such as Ockham's razor. But all information processing is physical, and another view is that learning can be understood as a process that is optimal under physical criteria. In this talk, I will highlight an approach that focuses on studying fundamental physical limits to information processing.

Over 150 years ago, Maxwell opened up a discussion about the interrelatedness of information and work with a thought experiment that is often referred to as "Maxwell's demon". Interest in this subject has spiked over the last decade as experimental demonstrations of Szilard's information engine and Landauer's limit have become feasible. Until recently, the discussion was largely limited to ultimate thermodynamic bounds which can only be reached without restrictions on a system. But real world agents typically have certain generic restrictions, for example, they run at finite rates, are limited to a size range, and are restricted in what they can sense and do. Interestingly, when the scope is widened, a connection emerges between thermodynamic efficiency and predictive inference: the efficiency of generalized information engines depends on their ability to filter out predictive information and discard irrelevant bits. This is enticing, as it allows for the derivation of a concrete machine learning method, the Information Bottleneck, from a physical argument, and beyond that points to a possible organizational principle. Similarly, modern concepts from non-equilibrium thermodynamics can be used to put information theory on a solid physical footing. The generalized Information Bottleneck framework contains learning from finite data, dynamical and interactive learning, as well as a generalization to quantum systems, and may provide a physically well grounded starting point for thinking about agency in the real world.


Sosuke Ito

The Fisher information and the entropy production

The entropy production is a measure of irreversibility in thermodynamics, and the Fisher information is a measure of estimation precision in information theory. Recently, a unified theory of thermodynamics and information theory has been intensively discussed, and it has revealed several relationships between these two measures. In this talk, I would like to review these relationships.

Hideaki Shimazaki

The brain as an information-theoretic engine: A new paradigm for quantifying perceptual capacity of neural dynamics

Neurophysiological studies of early visual cortices have revealed that the initial feedforward sweep of the neural response depends only on stimulus features, whereas perceptual effects such as awareness and attention are represented as modulation of the late component (e.g., ~100 ms after stimulus onset). The delayed modulation is presumably mediated by feedback connections from higher brain regions. Psychophysical experiments on humans using visual masking or transcranial magnetic stimulation have shown that selective disruption of the late component abolishes conscious experience of the stimulus. Here I provide a unified computational and statistical view of the modulation of sensory representation by internal dynamics in the brain, which offers a way to quantify the perceptual capacity of neural dynamics.

A key computation is gain modulation, which represents the integration of multiple signals by nonlinear devices (neurons). Gain modulation is ubiquitously observed in nervous systems as a mechanism for adapting nonlinear response functions to stimulus distributions. It will be shown that the Bayesian view of the brain provides a statistical paradigm for gain modulation as a way to integrate an observed stimulus with prior knowledge. Furthermore, the delayed gain modulation of the stimulus response via recurrent connections is modeled as a dynamic process of Bayesian inference that combines the observation and the prior with a time delay. Interestingly, it will be shown that this process is mathematically equivalent to a heat engine in thermodynamics. This view allows us to quantify the amount of delayed gain modulation and its efficiency in terms of the entropy of neural activity. I will show how we can quantify perceptual capacity from spike data using the state-space Ising model of neural populations, which we have been developing over the past 10 years.


Takuya Isomura

Reverse engineering approach to characterize cost functions and generative models for neural networks

It has recently become widely recognized that variational free energy minimization can provide a unified mathematical formulation of inference and learning processes. Given a discrete state-space environment, a class of biologically plausible cost functions for neural networks, in which the same cost function is minimized by both neural activity and plasticity, can be cast as variational free energy under an implicit generative model. This equivalence suggests that any neural network minimizing its cost function implicitly performs variational Bayesian inference. We have reported that in vitro neural networks receiving input stimuli generated from hidden sources perform causal inference or source separation by minimizing variational free energy, as predicted by the theory. These results suggest that our reverse engineering approach is potentially useful for formulating the neuronal mechanisms underlying inference and learning in terms of Bayesian statistics.

Masatoshi Yoshida

Enactivism and free-energy principle

Our retinas have high acuity only in the fovea, meaning that we have to construct our visual scene by constantly moving our eyes. Thus, vision is not a passive formation of a representation, but active sampling of visual information through the agent’s actions. Sensorimotor enactivism (SME) extends this idea and proposes that seeing is an exploratory activity mediated by the agent’s mastery of sensorimotor contingencies (i.e., by a practical grasp of the way sensory stimulation varies as the perceiver moves). However, SME lacks formal, empirically testable theories or models. Here we argue that the free-energy principle (FEP) may serve as a computational model of SME. In FEP, agents interacting with their environments through their sensors minimize the variational free energy (VFE), which is defined by the approximate inference q(x) about the hidden states (x) of the environment and the generative model, the joint probability distribution p(x,s) that describes how the agent’s sensors (s) interact with the environment (x). In this framework, representationalist ideas such as forming an internal representation or unconscious inference can be interpreted as having the approximate inference q(x). On the other hand, SME can be interpreted as having the generative model p(x,s). Since minimization of VFE requires both q(x) and p(x,s), adaptive behavior such as vision may require continuous matching between q(x) and p(x,s). Thus, FEP may help to integrate representationalist and enactive views into a theory of vision. We will also argue that the structure of conscious experience, as informed by Husserlian phenomenology, which is one of the origins of the enactive view, constrains possible theories of conscious experience.
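The way the VFE depends on both q(x) and p(x,s) can be written out explicitly. The following is the standard textbook form of the variational free energy, offered as a sketch rather than as the speaker's own formulation; the symbols F and D_KL and the expectation notation are assumptions not used in the abstract.

$$
F[q] = \mathbb{E}_{q(x)}\left[\ln q(x) - \ln p(x,s)\right] = D_{\mathrm{KL}}\left[q(x)\,\|\,p(x \mid s)\right] - \ln p(s)
$$

Because the KL term is non-negative, F is an upper bound on the surprise, the negative of ln p(s), and the bound is tight when q(x) equals the posterior p(x|s) implied by the generative model.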

Martin Bieh

TBD

Larissa Albantakis

Agency and Autonomy within the causal framework of Integrated Information Theory (IIT)

By definition, an agent must be an open system that dynamically and informationally interacts with an environment. This simple requirement, however, immediately poses a methodological problem: When subsystems within a larger system are characterized by physical, but also biological, or informational properties, their boundaries are typically taken for granted and assumed as given. How can we distinguish the agent from its environment in objective terms? In addition, agents are supposed to act upon their environment in an autonomous manner. But can we formally distinguish autonomous actions from mere reflexes?

Here, I will address these two issues in light of the causal framework of integrated information theory, which provides the tools to reveal causal borders within larger systems and to identify the causes of an agent's actions (or, e.g., the output of an image classifier). Finally, I will discuss the notion of functional equivalence (e.g., between a human and a computer simulating the human's behavior) and argue that implementation matters for determining agency and autonomy.


Christoph Salge

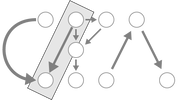

Coupled Empowerment Maximization

- From Single Agent Intrinsic Motivation to Social Intrinsic Motivation


Can we use the single-agent intrinsic motivation of empowerment to define generic, multi-agent behaviour? I will first introduce empowerment, an information-theoretic formalism that measures how much an agent is in control of its own perceivable future. Everything else being equal, one should keep one’s options open. After briefly discussing the possible relationship to agency itself, I will show simulation results that demonstrate some of empowerment’s properties, namely its ability to deal with and react appropriately to changing embodiments, and how it can even restructure its own environment. The focus of the talk will then shift to coupled empowerment maximisation, where a combination of different empowerment perspectives is used to design generic companion and antagonist behaviour. After demonstrating this in a few game-based settings, I will detail the opportunities and challenges of this approach. One is the importance of a forward model when dealing with other agents; the other is the relationship between relevancy and the information decomposition of one’s sensor input.
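For deterministic dynamics, empowerment (the channel capacity from action sequences to the resulting sensor states) reduces to the logarithm of the number of distinct states an agent can reach. The sketch below uses that reduction in a hypothetical grid world; the grid, the action set, and all function names are illustrative assumptions, not the simulations shown in the talk.

```python
import math
from itertools import product

# Hypothetical action set: four moves plus staying in place.
ACTIONS = {"N": (0, -1), "S": (0, 1), "E": (1, 0), "W": (-1, 0), "stay": (0, 0)}


def step(state, action, size):
    """Deterministic transition on a size x size grid; walls block movement."""
    x, y = state
    dx, dy = ACTIONS[action]
    nx, ny = x + dx, y + dy
    if 0 <= nx < size and 0 <= ny < size:
        return (nx, ny)
    return state  # bumping into a wall leaves the agent where it is


def empowerment(state, horizon, size):
    """n-step empowerment in bits for deterministic dynamics:
    log2 of the number of distinct states reachable in `horizon` steps."""
    reachable = set()
    for sequence in product(ACTIONS, repeat=horizon):
        s = state
        for action in sequence:
            s = step(s, action, size)
        reachable.add(s)
    return math.log2(len(reachable))


if __name__ == "__main__":
    print("centre:", empowerment((2, 2), 1, 5))
    print("corner:", empowerment((0, 0), 1, 5))
```

In a 5×5 grid, a centre cell has higher one-step empowerment than a corner cell, because walls make several actions lead to the same state.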

Pedro Mediano

Beyond integrated information: Information dynamics in multivariate dynamical systems

Research on foundational questions concerning topics like agency, consciousness, and self-organisation typically focuses on the study of functional organisation -- how the interactions between different components of a system give rise to rich information dynamics. Two prominent frameworks to study information dynamics in complex systems are the Partial Information Decomposition (PID) framework, and Integrated Information Theory (IIT), both extensions of Shannon's theory of information. In this talk we will present a novel tool in information dynamics, Integrated Information Decomposition (ΦID), that results from the unification of IIT and PID. We show how ΦID paves the way for more detailed analyses of interdependencies in multivariate time series, and sheds light on collective modes of information dynamics that have not been previously reported. Additionally, ΦID reveals that what is typically referred to as ‘integration’ is actually an aggregate of several heterogeneous phenomena. Finally, to ground the concepts of PID and ΦID, we present a novel measure of synergy with a concrete operational meaning, that can be readily extended to form what is, to the best of our knowledge, the first operational measure of integrated information. Together, these two contributions provide valuable tools to formulate and test theories of emergence, agency, and self-organisation in complex systems.

Fernando Rosas

Reconciling emergences: An information-theoretic approach to identify causal emergence in multivariate data

The notion of emergence is at the core of many of the most challenging open scientific questions, being as much a cause of wonder as a perennial source of philosophical headaches. Two classes of emergent phenomena are usually distinguished: strong emergence, which corresponds to supervenient properties with irreducible causal power; and weak emergence, which corresponds to properties generated by the lower levels in such "complicated" ways that they can only be derived by exhaustive simulation. While weak emergence is generally accepted, a large portion of the scientific community considers causal emergence to be either impossible, logically inconsistent, or scientifically irrelevant.

In this talk we present a novel, quantitative framework that assesses emergence by studying the high-order interactions of the system's dynamics. By leveraging the Integrated Information Decomposition (ΦID) framework [1], our approach distinguishes two types of emergent phenomena: downward causation, where macroscopic variables determine the future of microscopic degrees of freedom; and causal decoupling, where macroscopic variables influence other macroscopic variables without affecting their corresponding microscopic constituents. Our framework also provides practical tools that are applicable to a range of scenarios of practical interest, making it possible to test -- and possibly reject -- hypotheses about emergence in a data-driven fashion. We illustrate our findings by discussing minimal examples of emergent behaviour, and present a few case studies of systems with emergent dynamics, including Conway’s Game of Life, neural population coding, and flocking models.

[1] Mediano, Pedro AM, Fernando Rosas, Robin L. Carhart-Harris, Anil K. Seth, and Adam B. Barrett. "Beyond integrated information: A taxonomy of information dynamics phenomena." arXiv preprint arXiv:1909.02297 (2019).
